Model evaluation terms

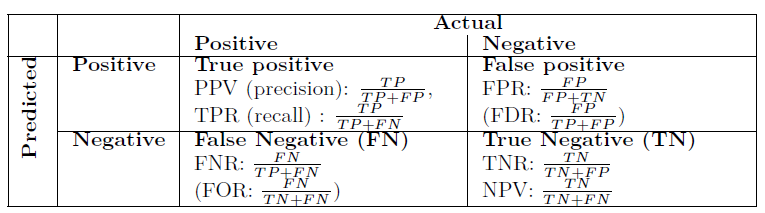

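Confusion matrix: For a binary classification algorithm, a confusion matrix cross-tabulates actual outcomes against predicted outcomes. It shows how many actual positives were predicted as positive (true positives) or negative (false negatives), and how many actual negatives were predicted as positive (false positives) or negative (true negatives).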
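The four cells of the matrix can be counted directly from paired actual and predicted labels. The following is a minimal sketch, not a reference implementation: the function name `confusion_counts` and the example labels are made up for illustration, and labels are assumed to be encoded as 1 (positive) and 0 (negative).

```python
from collections import Counter


def confusion_counts(actual, predicted):
    """Count the four confusion-matrix cells for binary labels (1 = positive)."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p and a:
            counts["TP"] += 1  # predicted positive, actually positive
        elif p and not a:
            counts["FP"] += 1  # predicted positive, actually negative
        elif a:
            counts["FN"] += 1  # predicted negative, actually positive
        else:
            counts["TN"] += 1  # predicted negative, actually negative
    return {cell: counts[cell] for cell in ("TP", "FP", "FN", "TN")}


# Illustrative labels only (not taken from any real dataset).
actual = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 0, 1, 0, 1, 0, 0, 1]
counts = confusion_counts(actual, predicted)
print(counts)
```

The four counts always sum to the number of instances scored, which is a quick sanity check on any confusion matrix.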

False positive: A false positive is an instance where an ML model predicts a positive outcome, but the prediction is wrong because the actual outcome is negative.

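False positive rate: The false positive rate is the proportion of actual negative outcomes that the model incorrectly predicts as positive, that is, false positives divided by the sum of false positives and true negatives.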

True positive: In the context of predictions made by a classification model, a true positive represents a case where the model predicts a ‘positive’ outcome, and the real outcome is also positive.

True positive rate: The true positive rate is the proportion of actual positive outcomes that an ML model correctly predicts as positive, that is, true positives divided by the sum of true positives and false negatives.
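Both rates are computed from the confusion matrix cells, each relative to an actual class: the true positive rate is TP / (TP + FN) and the false positive rate is FP / (FP + TN). The sketch below illustrates this under stated assumptions: the function name `rates` and the counts passed to it are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def rates(tp, fp, fn, tn):
    """Return (true positive rate, false positive rate) from confusion-matrix counts."""
    # TPR: share of actual positives that were predicted positive.
    tpr = tp / (tp + fn) if (tp + fn) else float("nan")
    # FPR: share of actual negatives that were predicted positive.
    fpr = fp / (fp + tn) if (fp + tn) else float("nan")
    return tpr, fpr


# Hypothetical counts: 50 actual positives, 100 actual negatives.
tpr, fpr = rates(tp=40, fp=10, fn=10, tn=90)
print(f"TPR = {tpr:.2f}, FPR = {fpr:.2f}")
```

Note that the denominators are the actual class totals, not the number of positive predictions. Dividing false positives by all predicted positives gives a different quantity, the false discovery rate.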

Table 4.3: Confusion matrix for binary classification